Hi All,

Today we will discuss about which database user can be used at Data Server (Topology) and why. As usual, Oracle database will be used in the example.

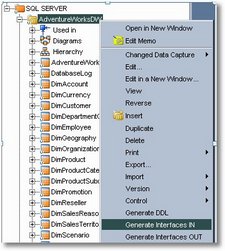

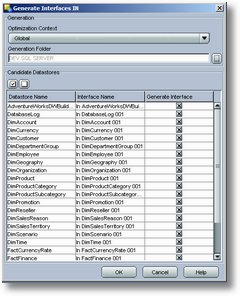

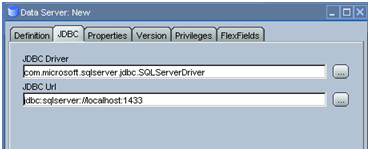

When you start with ODI, one of the firsts things to learn is that is necessary configure database connections to reverse the source and target table.

And what every single beginner does? Configures both Data Servers (source and target) using the owner schema of the source and target tables and, after that in the ODI physical schema, choose the same schema as “Data Schema” and “Work Schema”.

This is a natural behavior once he is a beginner but let us make a simple analysis about what was done from Database point of view.

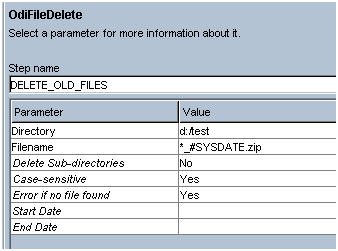

- A new set of tables (C$, I$, E$) were added into database with no planning from DA (Data Administrator)

- A new application is accessing the database by a user that wasn’t created for it

- Thinking about tablespaces and ETL/ELT process a not provided load is happening with no warning to those responsible to manager the database

Some possible effects of this 3 points:

- Developers from other softwares than ODI starts to see ODI tables and could interferer in its working dropping a I$ table that “shouldn’t” be there once it doesn’t belongs to the original schema.

- The amount of allowed connections can be not sufficient for the ODI connections

- Massive data transfers can interferer in the normal working of source and target systems like:

- The target database raise an error about no more Tablespace space, in the Final User GUI at 2:15 PM and a SR to Infra-Structure department is opened

- When the DBA, at 2:30 PM, investigates what is happening, he founds nothing once there is the projected space on database

- Real situation: A massive process create a huge C$ and a huge I$ that used all tablespace (in that moment the Final User got the error) but as the C$ and I$ are dropped at end of execution, everything comes back to the normal. See bellow:

2:00 PM 2:15 PM 2:30 PM Free Tablespace 55 0 55 Normal Use 45 45 45 ODI instantaneous Use 0 55 0

Then what do to avoid all of this problems (and a few more not discussed…)???

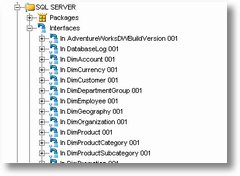

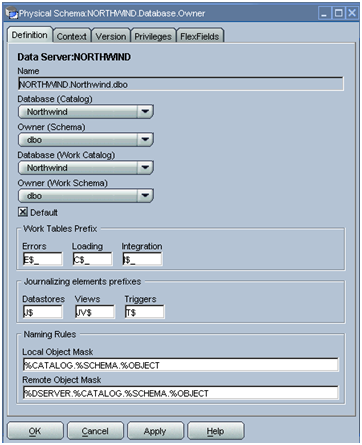

The solution is very simple and the same as always… CREATE A USER JUST FOR ODI! And make it as your Staging Area (Work Schema at Physical Schema).

In this way it will be possible:

- Define a specific tablespace to it

- Total security control once it will be necessary to grant access to any table that needs to be read or updated or selected, etc.

- No interference with systems already established

- Less ODI objects once only one Data Server (Topology) by database will be necessary.

- Centralized control of database objects created by ODI once they will be under the same owner/schema

These are some advantages between others!

Well folks, I hope to have helped in the understanding of a “Best Practice” in ODI Administration.

One small tip:

– Print this post and take it to the DBA from your environment, I can assure you that a lot of questions and problems will be avoid when ODI starts… 😉

As always, comments are very welcome.

Cezar Santos