Hi Friends,

It’s a post about one of the most questioned subjects that we receive.

I hope you all enjoy once it’s a very detailed one….

CDC consistent is a concept where Journalizing is carried at the Model level . As you all are aware of the point that in ODI CDC are of two types namely Simple and Consistent. We have already covered the Simple part early . You can visit this link for simple CDC steps. –http://odiexperts.com/changed-data-capture-cdc

CDC Consistent is great when we have tables in a schema related to each other via PK – FK relationship, as CDC Consistent are applied on the Model rather than on the Data store level as practiced in Simple.

Please find the step by step instruction of initial set up of CDC Consistent

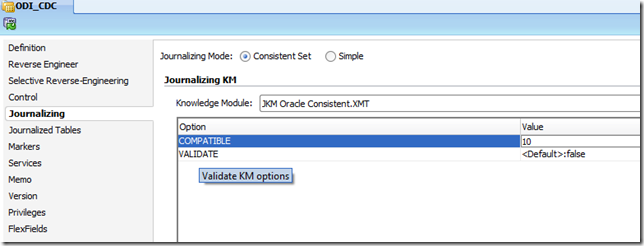

Step 1. Edit the Model and select the appropriate Consistent Journalizing mode and select the appropriate Consistent KM.

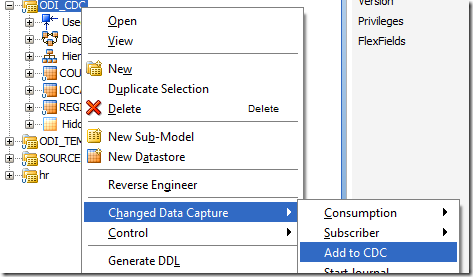

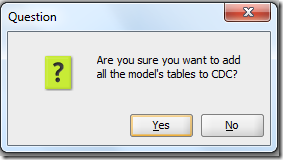

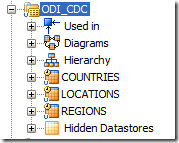

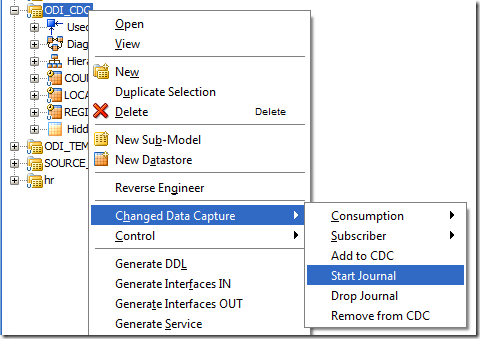

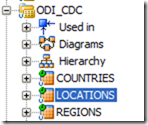

Step 2. Add the data stores into CDC.

As you can see the all the data stores will have the orange clock shaped icon.

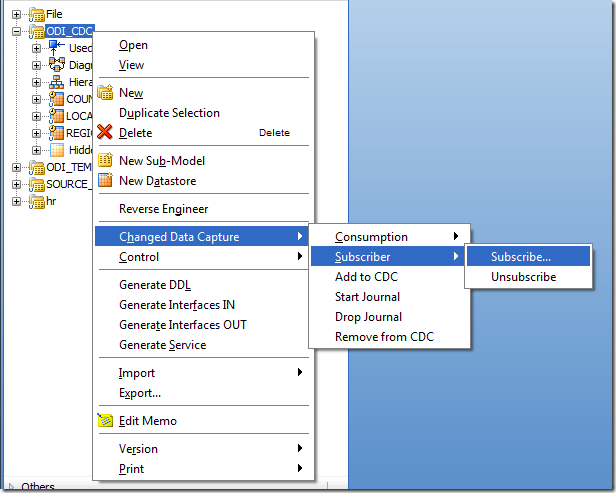

Step 3. Add the subscriber(s)

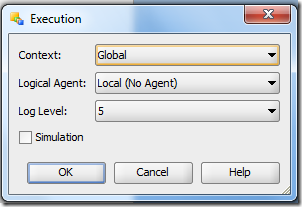

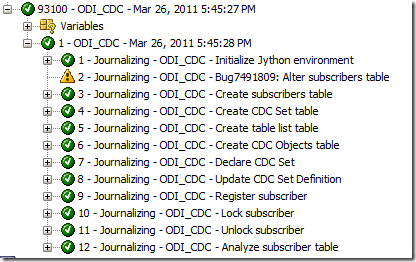

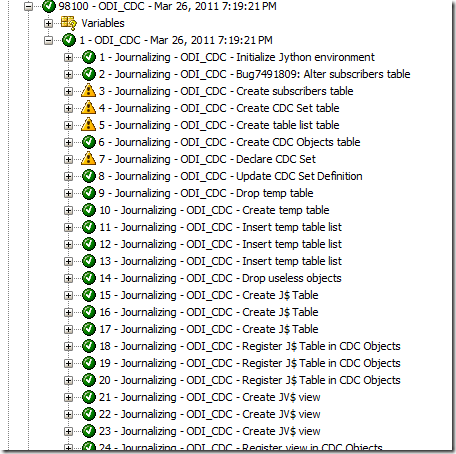

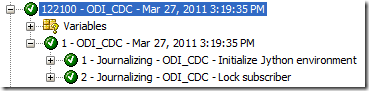

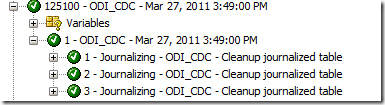

Step 4– Start the Journal

Once the Journalizing is successful the Orange clock icon will change to Green icon.

Testing the CDC Consistent on various scenarios.

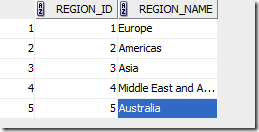

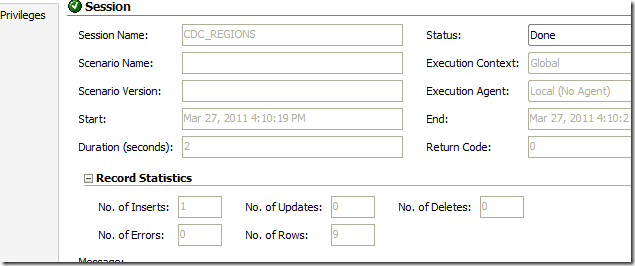

Simple Insert is being carried at the Source Table.

Data being shown at the Journalizing data option.

Lets see if the Journalized data gets flow through.

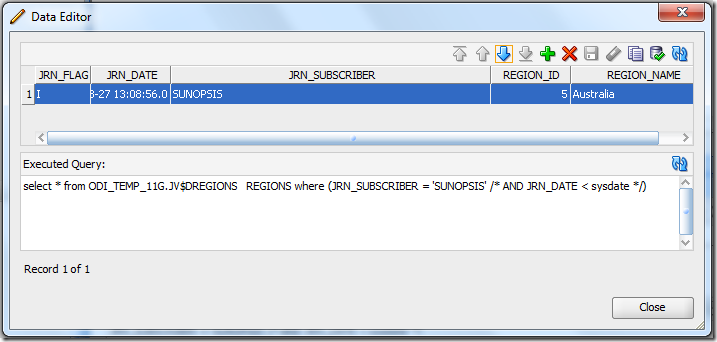

The records does not seems to come through . Let’s see the issue or the real reason behind it.

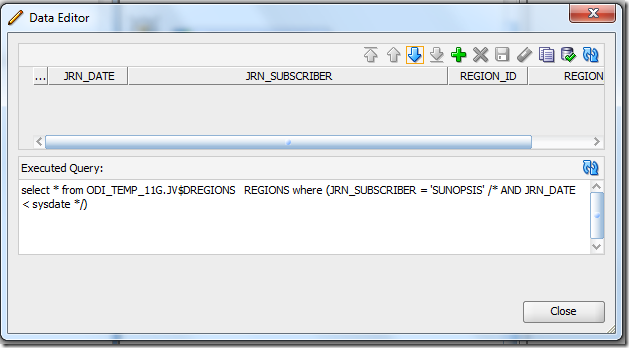

The source table for the journalizing is reading from the JV$ view, where as the data seems to be reading from the JV$D view.

The real reason behind why ODI reads from the JV$ rather than JV$D is that JV$ captures all the records from the JV$D by locking the subscriber.

Consider this example say at 10 pm there are 3 Inserts , 5 Updates and 1 deletes are happening at the source side and thus gets captured in the respective JV$D views .

We apply the Extend windows and Lock Journalize table and all the records in the JV$D gets captured into JV$.Now at that time there is again a new 1 insert and so gets captured in the JV$D views. Since there is lock in the subscriber table . New data will not be captured into JV$ .

Let’s repeat the necessary steps and rerun the interface.

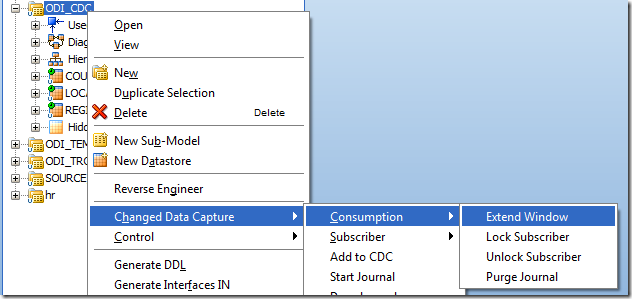

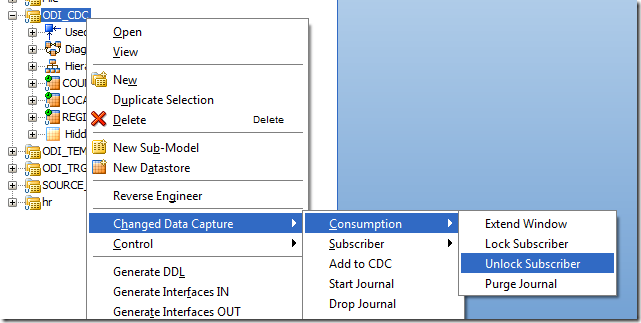

Step 1. Extend Window

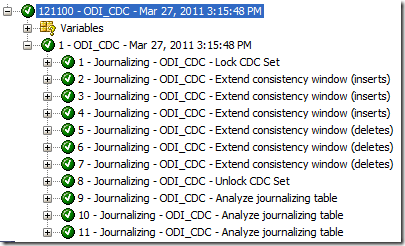

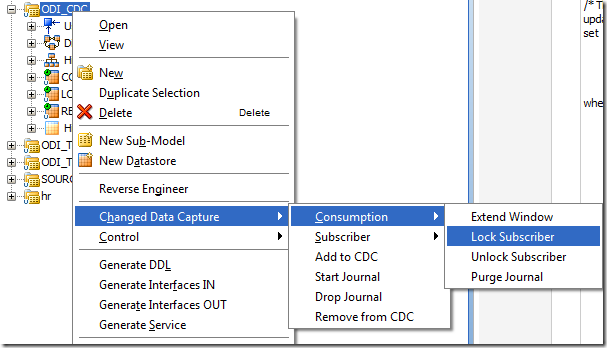

Step 2. Lock Subscriber

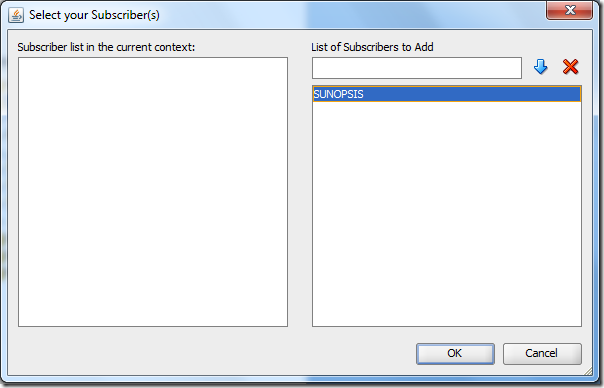

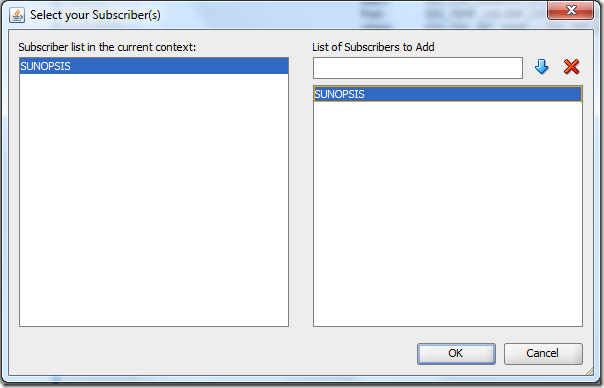

Select the right subscriber

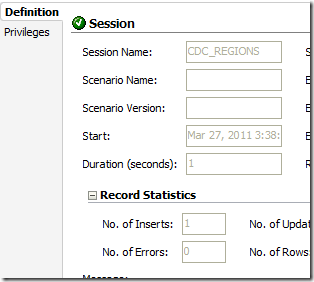

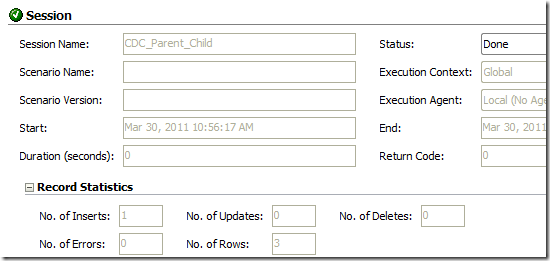

Step 3– Run the interface

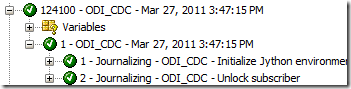

Step 4 – Unlock Subscriber

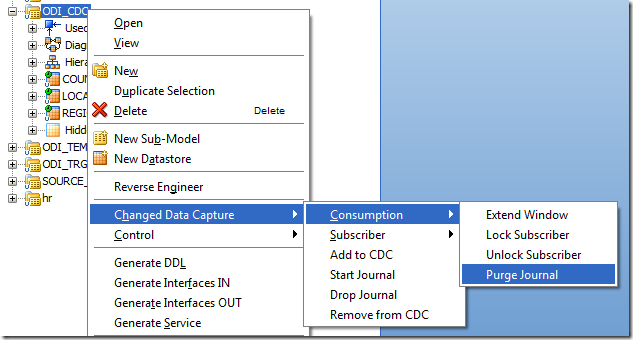

Step 5 – Purge Journal

Once the data is been captured the journal is purged.

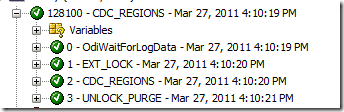

Lets see how we can process the above steps via Package.

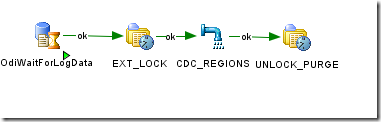

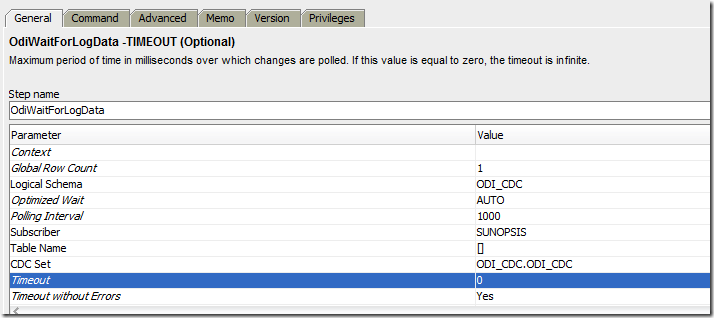

Step 1 – OdiWaitforLogData

Apart from the default setting provide the Logical Schema and either provide the table name or the CDC Set.

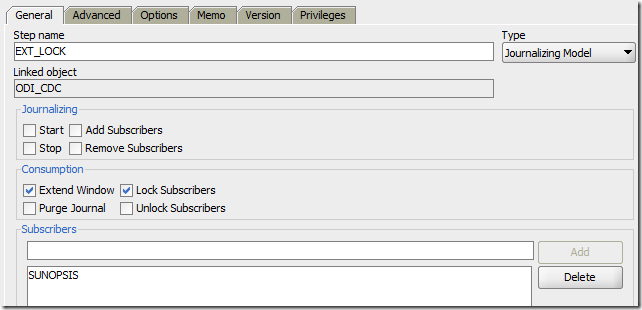

Step 2 – Extend and Lock Subscribers the model

Drag and drop the Model into the Package and in the Type select Journalizing Model

and check mark Extend windows and Lock Subscribers

Step 3– Interfaces

Now call the interfaces.

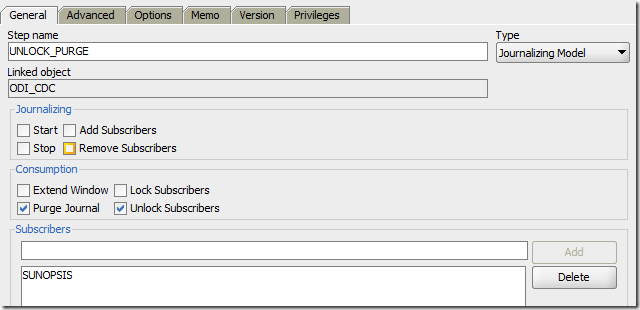

Step 4 – Unlock Subscribers and Purge Journal.

Next steps after the interface drag and drop the model and select Purge Journal and Unlock Subscribers.

Lets test the package . I am inserting another record and let see if the records come through .

Yes data has been inserted into the Target table.

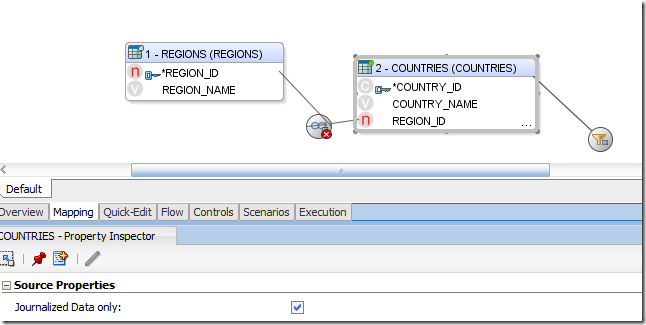

Single Journalized table per interface Limit.

If there are n number of Journalized table in an interface , only one interface can be marked as Journalized data , even if they are consistent based .

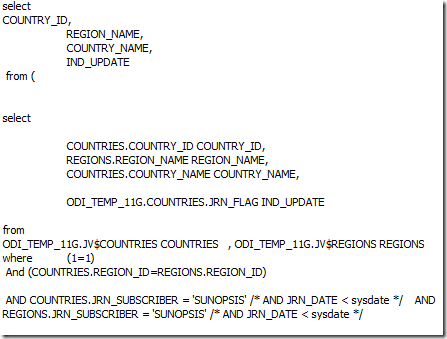

In order to avoid this limit , I have revised the code and re written it like this, so that we can use two journalized table without requiring to read through the complete table of the other source.

Note – There is a big assumption I am making that both the tables namely parent and child are dependent on each other via PK-FK and so if there is an insert in one the of the Parent table then there will be insert into the child table too

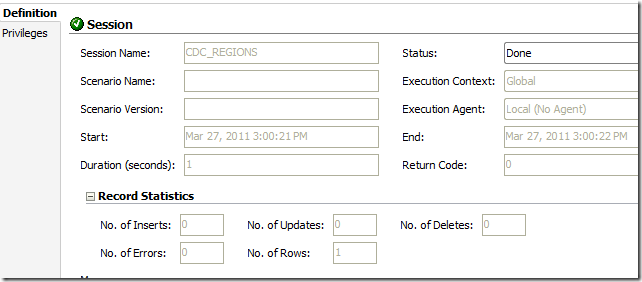

<% if (odiRef.getDataSet(i, "HAS_JRN").equals("1")) { %>

<%=odiRef.getJrnInfo("FULL_TABLE_NAME")%>.JRN_FLAG IND_UPDATE <%} else {%>'I' IND_UPDATE <%}%>

from

<%=odiRef.getSrcTablesList("", "[WORK_SCHEMA].<? if (u0022[IND_JRN]u0022.equals(u00221u0022)){?>JV$[TABLE_NAME] [TABLE_NAME] <?} else {?>[TABLE_NAME] [TABLE_NAME]<?}?> ", " , ", "")%>

where (1=1)

<%=odiRef.getJoin(i)%>

<%=odiRef.getFilter(i)%>

<%=odiRef.getSrcTablesList(" ", "AND [TABLE_NAME].<? if (u0022[IND_JRN]u0022.equals(u00221u0022)){?>JRN_SUBSCRIBER = 'SUNOPSIS' /* AND JRN_DATE < sysdate */ <?} else {?><?}?> ", " ", "")%>

<%=odiRef.getGrpBy(i)%>

<%=odiRef.getHaving(i)%>

<%}%>

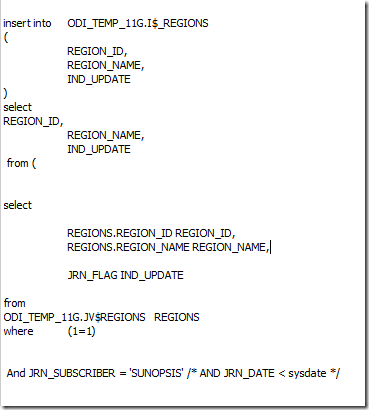

In order to use the above code in any KM.

Mark the parent Datastore with Journalized data store option.

Replace the code JRN_FLAG IND_UPDATE with <%=odiRef.getJrnInfo("FULL_TABLE_NAME")%>.JRN_FLAG IND_UPDATE

so it can use the Parent JRN_FLAG to accordingly drive the target.

Replace the code <%=odiRef.getFrom(i)%> with <%=odiRef.getSrcTablesList("", "[WORK_SCHEMA].<? if (u0022[IND_JRN]u0022.equals(u00221u0022)){?>JV$[TABLE_NAME] [TABLE_NAME] <?} else {?>[TABLE_NAME] [TABLE_NAME]<?}?> ", " , ", "")%>

so if the datastore is journalized then its associated JV$ view is used else the table name

Similarly replace <%=odiRef.getJrnFilter(i)%> with <%=odiRef.getSrcTablesList(" ", "AND [TABLE_NAME].<? if (u0022[IND_JRN]u0022.equals(u00221u0022)){?>JRN_SUBSCRIBER = 'SUNOPSIS' /* AND JRN_DATE < sysdate */ <?} else {?><?}?> ", " ", "")%>

Here for this example i have used the hard coded subscriber name of SUNOPSIS , you can use the getoption and pass the subscriber name in the Interface option.

The main reason for re writing it this way is that , when we have only one table as journalized , ODI have to read through the other child tables even though they are Journalized.

Lets see an example of the above code

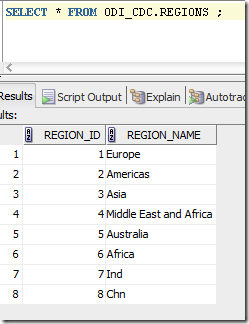

SOURCE

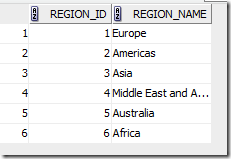

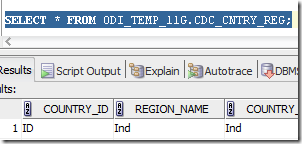

Two new region id is inserts into Regions table with region id 7 and 8

Record is insert with country _id ID and region id 7

Looking at the combination and the Location parent driving then both will be inserted into JV$D views for the respective table.

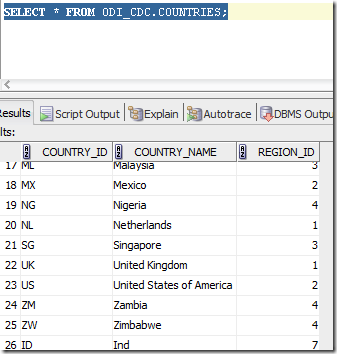

TARGET

As you can see for the country id and region id 7 , the target table has correctly fetched the associated Region name .

Hope this article gives you a better understanding on the CDC Consistent type.

Thank you and please, keep visiting us.

25 Comments

Leave a reply →